(Caption: OpenAI GPT-OSS represents an important milestone in AI’s evolution from closed to hybrid open systems. Shot at Lac de Neuchâtel lakeside, Switzerland. Image source: Ernest.)

tl;dr

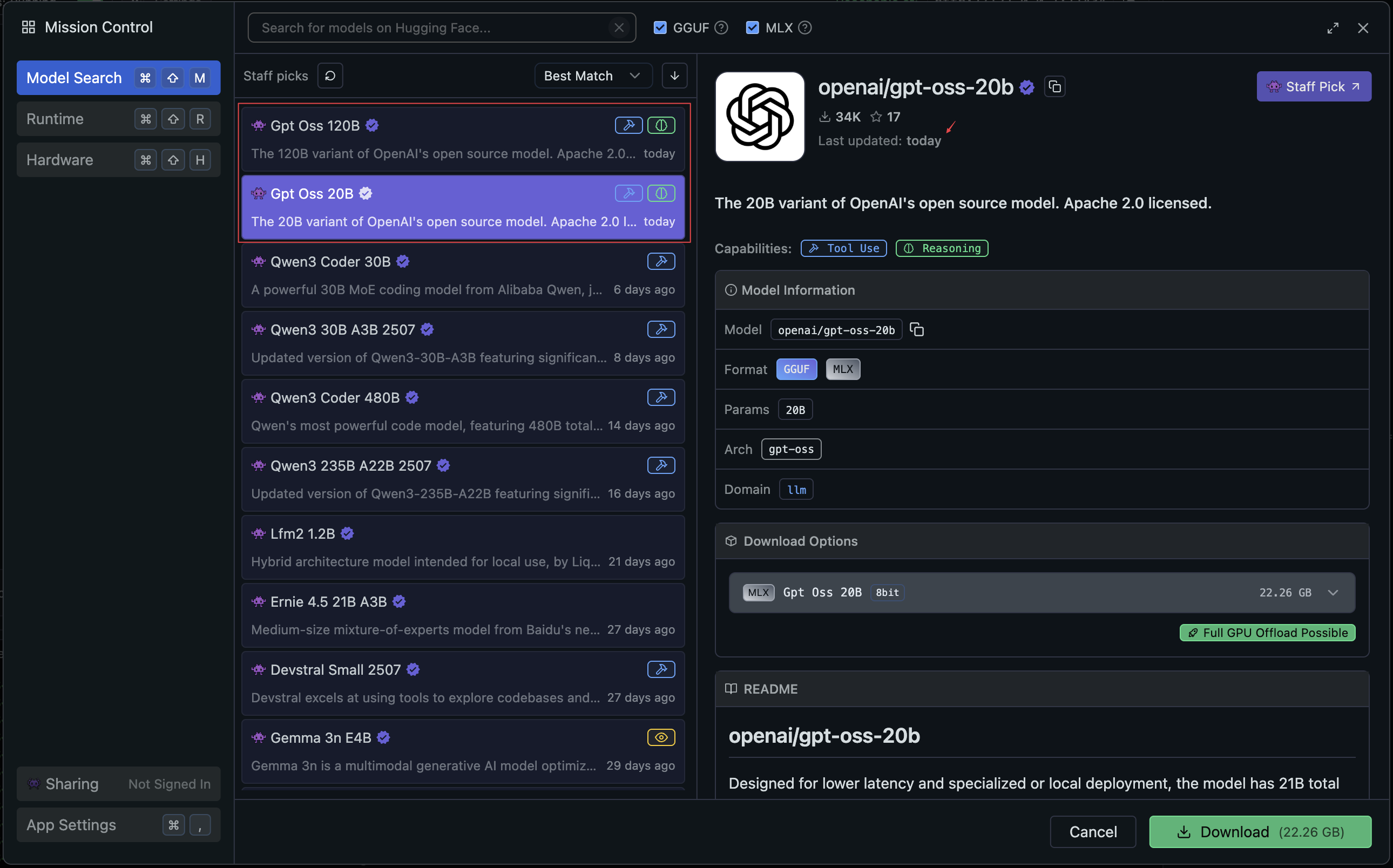

OpenAI has released two open-weight, reasoning-oriented language models: gpt-oss-120b and gpt-oss-20b. Both can run locally or on a range of cloud and third-party inference platforms, and both are licensed under Apache-2.0 with an accompanying usage policy 1. OpenAI emphasizes that the models went through safety testing against misuse.

- Performance: In OpenAI’s published benchmarks, gpt-oss-120b outperforms o4-mini on health conversations (HealthBench) and scores within about two points of it on math competitions (AIME, with tools), while reaching 90% on MMLU; the smaller gpt-oss-20b also excels at competition math, scoring 98.7% on AIME 2025 2.

- Hardware-friendly: With MXFP4 quantization, gpt-oss-120b needs roughly 80 to 96 GB of VRAM, while gpt-oss-20b fits in about 16 GB (its weights file is roughly 12 GB), which puts it within reach of consumer hardware.

- Innovative format: Supports Harmony response format, a multi-channel message format that carries reasoning processes and tool calls.

- Complete reasoning: Provides Chain-of-Thought access for debugging and trust building 3.

- Ecosystem integration: Deep integration with Hugging Face, vLLM, Ollama, LM Studio, Amazon Bedrock, Databricks and other platforms, with models and platform managed deployments released simultaneously 4.

- Security assurance: Systematic safety testing completed through Preparedness Framework 5, with maliciously fine-tuned versions not reaching high-risk capability thresholds.

1. OpenAI’s Significant Strategic Shift in Open Source

After releasing GPT-2 in 2019, OpenAI kept its language models closed and offered them only through APIs and its own products. The release of gpt-oss 4 signals a strategic pivot: from a purely closed approach to a hybrid one.

1.1 Background Context

In the open-source AI ecosystem, Meta’s Llama series (since Feb 2023) and Google’s Gemma series (since Feb 2024) have already built strong developer communities. OpenAI may have concluded that relying solely on closed APIs would cost it market share, particularly among enterprises that increasingly demand strict data privacy and data isolation.

The launch of GPT-OSS allows OpenAI to:

- Expand influence within developer communities

- Meet enterprise local deployment and on-premise product deployment needs

- Form virtuous cycles with open source ecosystems

- Create an on-ramp (“entry learning path”) to its flagship closed models (GPT-4.1, GPT-4o, o3)

1.2 Business Considerations of Hybrid Strategy

This hybrid strategy lets OpenAI position its products differently for each market segment:

- Open-weight models (e.g. GPT-OSS)
  - Attract developers, educational institutions, SMBs
  - Simpler, single-purpose application scenarios
- Closed API models (e.g. GPT-4.1, GPT-4o, o3)
  - Serve large enterprises
  - Application scenarios requiring multimodal capabilities
- Professional services
  - Customized solutions for specific industries

For a complete list of currently available OpenAI models, please refer to the official platform documentation: Models - OpenAI API.

2. GPT-OSS Core Technology and Features

GPT-OSS is not merely an “open source version” of OpenAI’s models, but an architecture specifically optimized for reasoning and agentic applications.

2.1 Model Architecture: Mixture-of-Experts (MoE)

GPT-OSS uses a Transformer architecture with Mixture-of-Experts (MoE) layers 6, a technique that activates only a subset of the parameters for each token and so reduces inference cost while preserving model capacity:

- gpt-oss-120b
  - Large-scale parameter configuration
  - Uses MoE architecture to distribute computational load
- gpt-oss-20b
  - Medium-scale parameter configuration
  - Suitable for broader deployment scenarios

Specific parameter counts and expert configuration details can be found on the Hugging Face model pages 3 7.

This design allows the model to dynamically select the most relevant “experts” based on input content, maintaining large model capabilities while controlling computational costs.
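To make the routing idea concrete, here is a minimal pure-Python sketch of top-k expert routing. The toy experts, the router logits, and `k=2` are assumptions chosen for readability; they are not the actual GPT-OSS configuration, and a real router computes its logits from each token’s hidden state.

```python
import math

def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and softmax-normalize their scores."""
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    peak = max(router_logits[i] for i in top)  # subtract the max for numerical stability
    exps = {i: math.exp(router_logits[i] - peak) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}

def moe_forward(x, experts, router_logits, k=2):
    """Run only the selected experts and mix their outputs by routing weight."""
    weights = top_k_route(router_logits, k)
    return sum(w * experts[i](x) for i, w in weights.items())

# Toy experts: each one just scales its input by a different factor.
experts = [lambda x, s=s: x * s for s in (1, 10, 100, 1000)]
router_logits = [0.1, 2.0, -1.0, 2.0]  # hypothetical scores for one token

output = moe_forward(1.0, experts, router_logits, k=2)
print(output)
```

Only two of the four experts run for this input, which is where the compute savings come from: the total parameter count stays large, but the per-token work scales with `k`.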

2.2 MXFP4 Quantization Technology

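MXFP4 (Microscaling 4-bit Floating Point) is a block-scaled 4-bit floating-point format defined in the Open Compute Project (OCP) Microscaling (MX) specification, which NVIDIA co-developed with other hardware vendors. It is well suited to inference:

- Memory savings: Reduces memory requirements by approximately 50-75% compared to traditional 16-bit weights, depending on how much of the model is quantized

- Hardware support: Runs on NVIDIA Hopper (H100) through optimized kernels; native FP4 tensor-core support arrives with the Blackwell generation

- Precision preservation: Per-block scaling generally preserves model accuracy better than plain 4-bit integer quantization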

Through MXFP4 quantization technology:

- gpt-oss-120b

- VRAM requirement approximately 80GB, suitable for enterprise GPU deployment e.g. NVIDIA Hopper (H100)

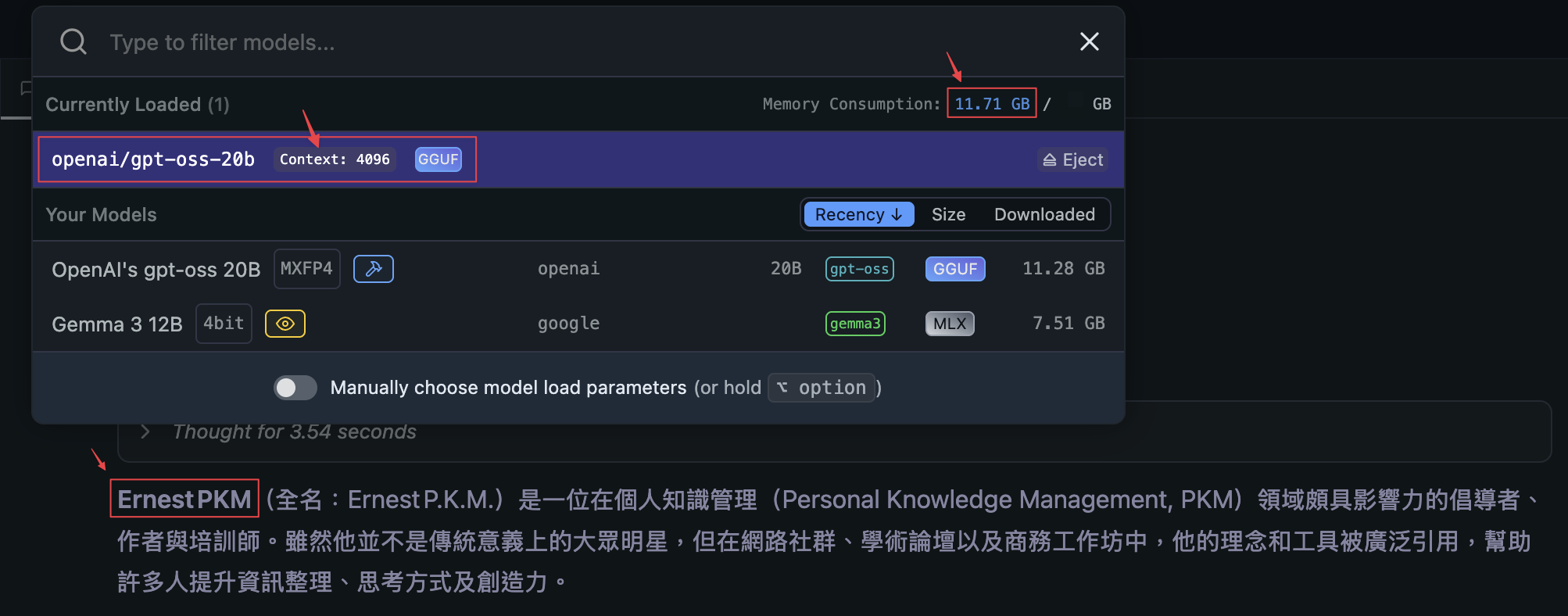

- On my Macbook Pro M4 Pro, using LM Studio, I see the GGUF version (with MXFP4) file size is approximately 63.39 GB

- MLX version (8-bit) is approximately 124.20 GB

- gpt-oss-20b

- VRAM requirement reduced from theoretical 42GB to actual 16GB, enabling it to run on consumer GPUs

- On my Macbook Pro M4 Pro, using LM Studio, I see the GGUF version (with MXFP4) file size is approximately 12.11 GB

- MLX version (8-bit) is approximately 22.26 GB

Actual memory (RAM, VRAM) requirements will vary depending on your environment configuration. Looking forward to more real-world testing results.
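The numbers above can be sanity-checked with a little arithmetic. In the OCP MX specification, MXFP4 stores 4-bit elements in blocks of 32 that share one 8-bit scale, which works out to 4.25 bits per weight. The sketch below assumes about 21 billion parameters for gpt-oss-20b (inferred from the 42 GB 16-bit figure) and assumes every weight is quantized, which overstates the savings because some layers stay in higher precision.

```python
MXFP4_BLOCK_SIZE = 32    # elements that share one scale factor
MXFP4_ELEMENT_BITS = 4   # each element is a 4-bit float
MXFP4_SCALE_BITS = 8     # one shared 8-bit scale per block
MXFP4_BITS_PER_WEIGHT = MXFP4_ELEMENT_BITS + MXFP4_SCALE_BITS / MXFP4_BLOCK_SIZE  # 4.25

def weight_memory_gb(n_params, bits_per_weight):
    """Memory for the weights alone, in decimal gigabytes."""
    return n_params * bits_per_weight / 8 / 1e9

N_PARAMS_20B = 21e9  # assumption: 42 GB at 2 bytes per parameter

bf16_gb = weight_memory_gb(N_PARAMS_20B, 16)
mxfp4_gb = weight_memory_gb(N_PARAMS_20B, MXFP4_BITS_PER_WEIGHT)
print(f"16-bit: {bf16_gb:.1f} GB, MXFP4: {mxfp4_gb:.1f} GB")
```

The MXFP4 estimate lands at about 11 GB, in the same range as the roughly 12 GB GGUF file; the difference is consistent with some tensors being kept at higher precision. Runtime memory is higher again once the KV cache and activations are included.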

2.3 Harmony Response Format

Harmony 8 is a response format OpenAI designed for GPT-OSS to address the limits of traditional chat formats in complex reasoning and tool-use scenarios. A simplified exchange looks like this (python_execute is a placeholder function name):

```text
<|start|>assistant<|channel|>analysis<|message|>I need to analyze multiple aspects of this problem...
[Chain-of-Thought reasoning process]
Let me analyze step by step:
1. First identify key variables...
2. Then evaluate various possibilities...<|end|>
<|start|>assistant<|channel|>commentary to=functions.python_execute <|constrain|>json<|message|>{"code": "..."}<|call|>
<|start|>assistant<|channel|>final<|message|>Based on the above analysis, my conclusion is...<|return|>
```

Harmony’s advantages:

- Channel separation: Reasoning (analysis), tool calls (commentary), and final answers (final) each travel in their own channel

- Structural transparency: Clear distinction between different types of model outputs

- Debug-friendly: Developers can fully inspect the reasoning process
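As a rough illustration of why the channel structure helps, the sketch below splits a raw Harmony-style completion into channels with a regular expression and keeps only the `final` channel for display. It is a simplified parser written for this post, not OpenAI’s tooling; the token shapes follow the raw LM Studio output shown later in this article, and production code should rely on the parsing tools described in the Harmony documentation.

```python
import re

# One message body: <|channel|>NAME [header]<|message|>CONTENT, up to a stop token.
_MESSAGE = re.compile(
    r"<\|channel\|>(?P<channel>\w+)(?P<header>.*?)<\|message\|>"
    r"(?P<content>.*?)(?=<\|end\|>|<\|return\|>|<\|call\|>|<\|start\|>|\Z)",
    re.DOTALL,
)

def split_channels(raw):
    """Return (channel, content) pairs in the order they appear."""
    return [(m["channel"], m["content"].strip()) for m in _MESSAGE.finditer(raw)]

def final_text(raw):
    """Keep only the user-facing `final` channel; drop reasoning and tool traffic."""
    return "\n".join(text for channel, text in split_channels(raw) if channel == "final")

raw = (
    "<|channel|>analysis<|message|>Need to explain motivations.<|end|>"
    "<|start|>assistant<|channel|>final<|message|>Open weights build trust."
)
print(split_channels(raw))
print(final_text(raw))
```

Logging the output of `split_channels` while showing users only `final_text` keeps the reasoning available for debugging without exposing it in the product.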

2.4 Complete Access to Chain-of-Thought

Unlike many “black box” models, GPT-OSS provides complete Chain-of-Thought access.

- Transparent reasoning: Developers can inspect each step of the model’s thinking process

- Debugging capability: Quickly identify reasoning errors or biases

- Trust building: Build confidence in model outputs through observing reasoning processes

- Learning opportunities: Researchers can deeply understand large model reasoning patterns

Important note: OpenAI advises that Chain-of-Thought should not be directly displayed to end users, as it may contain hallucinations, harmful content, or inappropriate information 3.

2.5 Tool Usage and Agent Capabilities

GPT-OSS was designed with Agentic AI requirements in mind, featuring built-in tool usage (tool use; function calling) capabilities:

- Web browsing: Ability to search (web search) and fetch (web fetch) web information

- Code execution: Native support for Python code execution and debugging (aside: for other non-mainstream programming languages, consider using intermediate languages for translation assistance)

- Structured output: Support for JSON Schema-constrained output formats

- Multi-step planning: Ability to decompose complex tasks and execute them step by step

One challenge, by analogy with the human brain, is that “memory storage” and “hands-on execution” rely on different brain regions. See the reference diagram from my COSCUP 2021 presentation on Building Personal Knowledge Systems.
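The function-calling side of this can be sketched as a small dispatcher: the application describes each tool with a JSON Schema, the model replies with a tool call, and the application runs it and returns the result as a `tool` message. The tool `get_order_status` and its data are hypothetical; the message shapes follow the OpenAI-compatible chat completions convention that servers such as vLLM expose.

```python
import json

def get_order_status(order_id: str) -> dict:
    """Hypothetical tool: a real one would query an order system."""
    return {"order_id": order_id, "status": "shipped"}

# JSON Schema description sent to the model alongside the chat messages.
TOOL_SPECS = [{
    "type": "function",
    "function": {
        "name": "get_order_status",
        "description": "Look up the shipping status of an order.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    },
}]

TOOL_REGISTRY = {"get_order_status": get_order_status}

def dispatch(tool_call: dict) -> dict:
    """Execute one tool call and wrap the result as a `tool` message."""
    name = tool_call["function"]["name"]
    if name not in TOOL_REGISTRY:
        raise ValueError(f"Model requested unknown tool: {name}")
    args = json.loads(tool_call["function"]["arguments"])  # arguments arrive as a JSON string
    result = TOOL_REGISTRY[name](**args)
    return {"role": "tool", "tool_call_id": tool_call["id"], "content": json.dumps(result)}

# A tool call shaped like what an OpenAI-compatible server returns.
example_call = {
    "id": "call_1",
    "type": "function",
    "function": {"name": "get_order_status", "arguments": '{"order_id": "A123"}'},
}
tool_message = dispatch(example_call)
print(tool_message)
```

In a multi-step agent loop, the application appends `tool_message` to the conversation and calls the model again until it answers without requesting another tool.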

3. System Architecture and Ecosystem Integration

GPT-OSS’s true value lies not just in the model itself, but in the end-to-end ecosystem integration OpenAI has arranged, from model to deployment.

3.1 Hugging Face: Model Publishing and Community Platform

Hugging Face serves as the primary publishing platform for GPT-OSS, providing:

- Model downloads: Complete weight files and configurations

- Quick start: Pre-configured transformers integration code

- Community discussion: Developer experience sharing and Q&A

- Model cards: Detailed technical specifications and usage guides

Developers can begin with simple code:

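```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "openai/gpt-oss-20b"
tokenizer = AutoTokenizer.from_pretrained(model_name)
# torch_dtype="auto" keeps the checkpoint's precision; device_map="auto" places
# the weights on the available GPU(s) and needs the accelerate package.
model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype="auto", device_map="auto")
```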

3.2 vLLM: High-Performance Inference Engine

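vLLM is an inference engine optimized for large language models, and it supports GPT-OSS.

Core advantages:

- PagedAttention: Pages the KV cache to cut memory waste; the vLLM paper reports 2 to 4x higher throughput than earlier serving systems at similar latency

- Continuous batching: Together with PagedAttention, yields up to 24x higher throughput than Hugging Face Transformers in the vLLM team’s own benchmarks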

- OpenAI-compatible API: Seamless replacement of existing integrations

Deployment example:

```bash
# Start vLLM service (quantization settings come from the checkpoint config,
# so no extra quantization flag should be needed)
vllm serve openai/gpt-oss-20b

# Use OpenAI-compatible API
curl http://localhost:8000/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "openai/gpt-oss-20b",
    "messages": [{"role": "user", "content": "Explain quantum computing"}]
  }'
```
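The same request can be made from Python. The sketch below uses only the standard library and assumes the vLLM server from the example above is listening on `localhost:8000`; the helper names `build_chat_request` and `chat` are made up for this illustration.

```python
import json
import urllib.request

def build_chat_request(prompt, model="openai/gpt-oss-20b",
                       base_url="http://localhost:8000/v1"):
    """Build (but do not send) a chat completions request."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def chat(prompt, **kwargs):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt, **kwargs)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]

# Requires the server to be running:
# print(chat("Explain quantum computing"))
```

Because the endpoint follows the OpenAI API shape, existing client code can usually be pointed at it by changing only the base URL and model name.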

3.3 LM Studio: Graphical Local Deployment Tool

LM Studio is a graphical tool for managing local AI models, and it gives non-technical users an intuitive way to deploy GPT-OSS:

- Visual model management: Drag-and-drop model installation and switching

- Built-in chat interface: Test models without additional programming

- Hardware auto-detection: Automatic GPU configuration identification and performance optimization

- Multi-format support: GGUF, GGML and other quantization formats

In an earlier comparison of LM Studio and Ollama running Gemma 3 on Apple Silicon, LM Studio was roughly 26% to 30% faster in tokens per second. Whether the same gap holds for GPT-OSS remains to be seen.

(OpenAI GPT-OSS was released on August 6, 2025 at dawn, and GPT-OSS models were available for download in LM Studio that same day.)

(Using LM Studio to load OpenAI GPT-OSS 20B, it actually recognizes the Ernest PKM concept!)

Here’s a sample of LM Studio running OpenAI GPT-OSS 20B GGUF:

- Very satisfying speed: 43.97 tok/sec • 1312 tokens • 0.40s to first token

- I asked it in Traditional Chinese: 請推論 OpenAI 為什麼會推出 gpt-oss 這樣的開源權重模型?背後有何策略?(Please reason why OpenAI would launch open-weight models like gpt-oss? What’s the strategy behind it?)

- It responded as follows:

```text
<|channel|>analysis<|message|>Need to explain motivations: openness, research community, trust, compliance, competition, safety, regulatory, etc. Provide strategic angle: brand, ecosystem, data collection, policy testing, etc.<|end|>

<|start|>assistant<|channel|>final<|message|>## Why Would OpenAI Launch Open-Weight Models Like "gpt-oss"?

| Reason | Strategic Background |
|--------|---------------------|
| **1️⃣ Community Promotion & Reputation Building** | - Allow researchers, students, and industry engineers to directly use, experiment with, and improve models, forming a spontaneous "user community"<br>- Open weights can generate numerous derivative works (papers, tools, APIs, etc.), naturally promoting OpenAI's technology to more people |
| **2️⃣ Transparency & Trust** | - Large models face significant concerns regarding safety, bias, and misuse; open weights demonstrate "I can actually make these parameters public"<br>- Through community scrutiny, OpenAI can discover and fix potential issues faster |
| **3️⃣ Testing & Optimization** | - Beyond formal commercial products (like ChatGPT), open models allow researchers to test performance on different hardware and frameworks<br>- Collect data from various usage scenarios through community feedback to improve future versions |
| **4️⃣ Regulatory & Policy** | - Some countries/regions require AI models to be "verifiable" or "auditable." Open weights meet such compliance requirements, avoiding bans due to closed models<br>- Can serve as a tool for OpenAI to demonstrate safety commitments to government agencies |
| **5️⃣ Ecosystem & Commercialization** | - Through open source, attract third-party developers to create plugins, fine-tuned versions, or specialized applications (medical, legal)<br>- When these derivative products succeed, they can be pushed to market, forming a "platform + ecosystem" revenue model |
| **6️⃣ Competitive Response** | - Competition in large models is fierce. Keeping only closed weights might lead to other companies "copying" or developing similar technologies; conversely, opening up can make OpenAI the "foundational research standard," building other companies' research on top of theirs |
| **7️⃣ Talent Attraction & Retention** | - Open source projects are powerful recruitment tools for attracting top researchers. Having opportunities to participate in developing and improving GPT-OSS is very attractive to talent |

---

## Specific Strategy Analysis

### 1. **Bidirectional Gateway (Open ↔ Closed)**

| Direction | Content |
|-----------|---------|
| **From Open → Closed** | New methods and optimization techniques from community research can be quickly integrated into commercial models.<br>Examples: More efficient fine-tuning techniques, sparse attention, quantization strategies, etc. |
| **From Closed → Open** | Let users experience "advanced AI even without payment," lowering barriers to commercial versions and increasing brand loyalty |

### 2. **Governance & Safety Rehearsal Ground**

- Open weights as "testing platform": Anyone can run models locally or in cloud and experiment with defense mechanisms (adversarial samples, input filtering)<br>
- This not only discovers vulnerabilities but allows OpenAI's safety team to evaluate risks across different hardware/frameworks in advance

### 3. **Policy Advocacy & Public Relations**

- In AI ethics and regulatory discussions, OpenAI can use "we've already made weights public" as evidence of transparency responsibility<br>
- This helps gain more persuasive power in international standard-setting

### 4. **Diversified Revenue Models**

| Model | Specific Examples |
|-------|-------------------|
| **Value-Added Services** | Provide premium fine-tuning, deployment tools, datasets for open models |
| **Platform Partnerships** | Collaborate with cloud providers (AWS, Azure, GCP) to offer GPT-OSS as foundational services |
| **Project Consulting** | Enterprise customized development (customer service bots, translation systems) |

---

## Potential Risks & Responses

1. **Misuse Risk**
   *Strategy*: Include "user agreements" or "ethical standards" when releasing weights, and provide safety audit tools

2. **Intellectual Property Loss**
   *Strategy*: Protect core algorithms through licensing (e.g., Apache 2.0 + commercial terms) while opening weights as "reference implementation"

3. **Competitor Copying**
   *Strategy*: Release models first, then add patented improvements in commercial products; maintain API closure to ensure core value isn't completely replicated

---

## Summary

OpenAI's core motivation for launching GPT-OSS is **"opening technology while building sustainable ecosystem and business models"**

- **Openness** enables rapid community iteration, improving safety and transparency
- **Closure** still maintains advantages in commercialization, compliance, and core optimization

This "dual-sided strategy" not only consolidates OpenAI's market leadership but provides a reference model for healthy AI industry development.
```

3.4 Ollama: Simplified Local Deployment Solution

Ollama reduces local GPT-OSS deployment to a single command 9, and it recently launched the Ollama App 10 (macOS, Windows), which adds a graphical UI. Simplicity is welcome, but compare performance as well, so that the time saved on installation is not paid back in electricity costs.

```bash
# One-click install and run
ollama run gpt-oss:20b

# Or choose larger model
ollama run gpt-oss:120b
```
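Once a model is running, Ollama also serves a local REST API. The sketch below posts to its `/api/chat` endpoint on the default port 11434 and joins the newline-delimited JSON chunks that Ollama streams back. The helper names `join_stream` and `ask` are made up for this example, and the code assumes a default local installation.

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # Ollama's default local address

def join_stream(lines):
    """Concatenate the content fragments from a newline-delimited JSON stream."""
    parts = []
    for line in lines:
        if not line.strip():
            continue
        chunk = json.loads(line)
        parts.append(chunk.get("message", {}).get("content", ""))
        if chunk.get("done"):
            break
    return "".join(parts)

def ask(prompt, model="gpt-oss:20b"):
    """Send one user message and return the full streamed reply."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    request = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return join_stream(response)  # the response yields one bytes line per chunk

# Requires a running Ollama instance with the model pulled:
# print(ask("Summarize the Apache-2.0 license in one sentence"))
```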

3.5 Deep AWS Ecosystem Integration

According to the official AWS blog 11, OpenAI GPT-OSS models were fully integrated into the AWS ecosystem upon release, providing complete cloud AI solutions for enterprises.

3.5.1 Amazon Bedrock Support

Amazon Bedrock now supports the GPT-OSS model family, providing:

Enhanced technical specifications:

- 128K context window: GPT-OSS on Bedrock supports context windows of up to 128K tokens, which is long compared with the default configurations of many open-weight models

- Adjustable reasoning levels: Supports low/medium/high reasoning intensity settings, allowing users to balance performance and cost flexibly

- OpenAI-compatible endpoints: Provides fully OpenAI API-compatible interfaces, minimizing migration costs
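Outside of platform-specific settings, the reasoning level is usually selected through the system prompt: OpenAI’s model card describes a line of the form `Reasoning: high`. A minimal sketch, assuming your serving stack passes the system message through to the model; the helper `with_reasoning_level` is hypothetical.

```python
VALID_LEVELS = ("low", "medium", "high")

def with_reasoning_level(messages, level="medium"):
    """Return a copy of `messages` whose system prompt requests a reasoning level."""
    if level not in VALID_LEVELS:
        raise ValueError(f"level must be one of {VALID_LEVELS}, got {level!r}")
    directive = f"Reasoning: {level}"
    if messages and messages[0]["role"] == "system":
        # Prepend the directive to the existing system prompt.
        first = dict(messages[0], content=f"{directive}\n{messages[0]['content']}")
        return [first, *messages[1:]]
    return [{"role": "system", "content": directive}, *messages]

conversation = [{"role": "user", "content": "Prove that the square root of 2 is irrational."}]
print(with_reasoning_level(conversation, "high"))
```

Higher levels generally produce longer chains of thought, so they trade latency and token cost for accuracy on hard problems.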

3.5.2 SageMaker JumpStart Integration

Amazon SageMaker JumpStart provides one-click GPT-OSS deployment solutions:

Quick deployment:

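```python
# SageMaker JumpStart deployment example
import sagemaker
from sagemaker.jumpstart.model import JumpStartModel

# IAM role that SageMaker assumes. get_execution_role() works inside SageMaker
# notebooks; elsewhere, pass a role ARN explicitly.
execution_role = sagemaker.get_execution_role()

model = JumpStartModel(
    model_id="huggingface-llm-gpt-oss-20b",  # confirm the exact ID in the JumpStart catalog
    role=execution_role,
    instance_type="ml.g5.2xlarge",  # confirm which instance types this model supports
)

# One-click deploy to SageMaker endpoint
predictor = model.deploy(
    initial_instance_count=1,
    accept_eula=True,
)

# Call inference (the accepted payload fields depend on the serving container)
response = predictor.predict({
    "inputs": "Analyze key factors in enterprise digital transformation",
    "parameters": {
        "max_new_tokens": 1024,
        "temperature": 0.7,
        "reasoning_level": "medium",  # Adjustable reasoning level
    },
})
```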

AWS also integrates the Strands Agents framework 12, an open-source AI agents development kit released by AWS in May 2025, supporting multiple model providers (including OpenAI, Anthropic, Meta, etc.).

3.6 Other Enterprise-Level Integrations

3.6.1 Databricks

- Native integration into Databricks platform

- Seamless connection to enterprise data lakes

- Support for large-scale batch inference

- Compliance with enterprise security and regulatory requirements

4. Performance Benchmarks and Comparative Analysis

GPT-OSS demonstrates strong performance across multiple authoritative benchmarks, particularly in reasoning-intensive tasks.

4.1 Core Performance Data

The following performance data is cited from OpenAI’s official release page 2 and Hugging Face model pages 3 7.

Reasoning and Academic Capabilities

| Benchmark | gpt-oss-120b | gpt-oss-20b | o3 | o4-mini | o3-mini | Description |
|---|---|---|---|---|---|---|
| MMLU | 90.0% | 85.3% | 93.4% | 93.0% | 87.0% | Massive Multitask Language Understanding |
| GPQA Diamond | 80.1% | 71.5% | 83.3% | 81.4% | 77.0% | Graduate-level science Q&A (without tools) |
| HLE | 19.0% | 17.3% | 24.9% | 17.7% | 13.4% | Humanity’s Last Exam (with tools) |

Mathematical Competition Capabilities

| Benchmark | gpt-oss-120b | gpt-oss-20b | o3 | o4-mini | o3-mini | Description |
|---|---|---|---|---|---|---|
| AIME 2024 | 96.6% | 96.0% | 95.2% | 98.7% | 87.3% | Math competition 2024 (with tools) |
| AIME 2025 | 97.9% | 98.7% | 98.4% | 99.5% | 86.5% | Math competition 2025 (with tools) |

Programming Capabilities

| Benchmark | gpt-oss-120b | gpt-oss-20b | o3 | o4-mini | o3-mini | Description |
|---|---|---|---|---|---|---|
| Codeforces | 2622 Elo | 2516 Elo | 2706 Elo | 2719 Elo | 2073 Elo | Competitive programming (with tools) |

Professional Application Capabilities

| Benchmark | gpt-oss-120b | gpt-oss-20b | o3 | o4-mini | o3-mini | Description |
|---|---|---|---|---|---|---|
| HealthBench | 57.6% | 42.5% | 59.8% | 50.1% | 37.8% | Real health conversation scenarios |
| Tau-Bench Retail | 67.8% | 54.8% | 70.4% | 65.6% | - | Tool calling and function usage |
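To see at a glance where gpt-oss-120b leads or trails o4-mini, the percentage scores from the tables above can be compared directly. The snippet below only re-reads those published numbers (Codeforces is left out because it is an Elo rating rather than a percentage); it does not run any benchmark.

```python
# (gpt-oss-120b, o4-mini) scores in percent, copied from the tables above.
SCORES = {
    "MMLU": (90.0, 93.0),
    "GPQA Diamond": (80.1, 81.4),
    "HLE": (19.0, 17.7),
    "AIME 2024": (96.6, 98.7),
    "AIME 2025": (97.9, 99.5),
    "HealthBench": (57.6, 50.1),
    "Tau-Bench Retail": (67.8, 65.6),
}

# Positive delta: gpt-oss-120b scores higher than o4-mini.
deltas = {name: round(oss - o4, 1) for name, (oss, o4) in SCORES.items()}
leads = [name for name, delta in deltas.items() if delta > 0]

for name, delta in sorted(deltas.items(), key=lambda item: item[1], reverse=True):
    print(f"{name:<18}{delta:+.1f}")
```

By these figures the open-weight model leads on HealthBench, HLE, and Tau-Bench Retail, and trails by one to three points elsewhere.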

4.2 Comparison with Open Source Models

| Feature Aspect | GPT-OSS | Llama 3.1 | Gemma 3 | DeepSeek-R1 |
|---|---|---|---|---|
| License | Apache 2.0 | Llama Community License (>700M MAU requires licensing) | Google custom license (commercial use allowed with policy restrictions) | MIT |
| Reasoning Transparency | Complete CoT | None | None | Partial CoT |
| Tool Usage | Native support | Requires fine-tuning | Native support | Native support |
| Deployment Ecosystem | Wide support | Wide support | Wide support | Limited support |
| Hardware Requirements | 16GB (20B) / 80GB (120B) | 16GB (8B) / 45GB (70B) / 243GB (405B) | 1.5GB (1B) / 6.4GB (4B) / 20GB (12B) / 46GB (27B) | 48GB (70B) / 480GB (671B) |
| Reasoning Capability | Specifically optimized for reasoning | General capability | General capability | Can reason |

5. Security and Risk Assessment

OpenAI has conducted rigorous security assessments of GPT-OSS, representing an important innovation in the open-weight model domain.

5.1 Preparedness Framework

The Preparedness Framework is OpenAI’s internal catastrophic risk assessment system, covering four core risk categories:

- Cybersecurity

- CBRN (Chemical, Biological, Radiological, Nuclear)

- Persuasion

- Model Autonomy

Each category is rated on four risk levels (low, medium, high, critical), and a model that reaches the “high” level will not be deployed.

5.2 Malicious Fine-tuning Risk Testing

For open-weight models, malicious fine-tuning is an important risk consideration. Attackers might:

- Use harmful data to fine-tune models

- Break model safety guardrails

- Create model versions capable of generating dangerous content

OpenAI’s testing methodology:

- Actively conduct “red team exercises,” simulating attacker behavior

- Use specialized biology and cybersecurity datasets for malicious fine-tuning

- Evaluate actual dangerous capabilities of fine-tuned models

Test results: Even after deliberately malicious fine-tuning, gpt-oss-120b did not reach the “high” capability threshold in OpenAI’s evaluations, which supports the decision to release the weights 2.

5.3 Usage Policy and Governance Mechanisms

In addition to Apache 2.0 licensing, GPT-OSS comes with a Usage Policy. Like a lock that only keeps honest people out, a policy on its own will (probably) deter only well-intentioned users.

6. Real-World Application Scenario Analysis

Based on deep analysis of GPT-OSS technical characteristics, we can identify multiple highly practical application scenarios.

6.1 Enterprise-Level Application Scenarios

6.1.1 Local Data Analysis and BI

Scenario description: Financial institutions need to analyze sensitive customer data but cannot send data to external APIs.

GPT-OSS solution:

- Deploy gpt-oss-120b in intranet environment

- Use Chain-of-Thought capabilities for complex financial analysis

- Directly query internal databases through tool usage functionality

- Generate detailed analysis reports and recommendations

Technical advantages:

- Data stays within intranet, meeting financial industry compliance requirements

- Apache 2.0 license allows commercial use

- Transparent reasoning process, convenient for auditing and verification
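As one way to picture the “query internal databases through tool usage” step, the sketch below exposes a read-only SQL tool that a locally deployed model could call. It uses an in-memory SQLite database with made-up data; the function name `run_readonly_query` and the table are hypothetical, and the string check is only a first line of defense that should be paired with read-only database credentials.

```python
import sqlite3

def run_readonly_query(conn, sql, max_rows=50):
    """Tool body: execute a single SELECT and return rows as dictionaries."""
    statement = sql.strip().rstrip(";")
    if not statement.lower().startswith("select") or ";" in statement:
        raise ValueError("Only single SELECT statements are allowed")
    cursor = conn.execute(statement)
    columns = [col[0] for col in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchmany(max_rows)]

# Demo data standing in for an internal database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id INTEGER, region TEXT, balance REAL)")
conn.executemany(
    "INSERT INTO accounts VALUES (?, ?, ?)",
    [(1, "EU", 1200.0), (2, "EU", 800.0), (3, "APAC", 500.0)],
)

rows = run_readonly_query(
    conn,
    "SELECT region, SUM(balance) AS total FROM accounts GROUP BY region ORDER BY region",
)
print(rows)
```

The row cap keeps tool results small enough to fit back into the model’s context, and rejecting anything but a single SELECT limits what a misbehaving prompt can do.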

6.1.2 Customer Service and Support Automation

Scenario description: E-commerce platforms need to handle large volumes of customer service inquiries, particularly complex technical questions and return processes.

GPT-OSS solution:

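```python
# Customer service bot example.
# `generate` is a placeholder for any callable that sends chat messages (plus
# tool names) to a GPT-OSS deployment and returns the reply.
SYSTEM_PROMPT = """You are a professional customer service representative who needs to:
1. Analyze the core needs of customer problems
2. Query relevant policies and product information
3. Provide accurate and humanized solutions"""

def handle_customer_query(query, customer_context, generate):
    # Pass plain role/content messages and let the serving stack's chat template
    # render them into Harmony, rather than hand-writing the special tokens.
    messages = [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user",
         "content": f"Customer information: {customer_context}\nQuestion: {query}"},
    ]
    # Tool names are illustrative; register real tools with your serving stack.
    response = generate(messages, tools=["database_query", "policy_lookup"])
    return response
```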

6.1.3 Code Review and Refactoring

Scenario description: Software development teams need to conduct quality reviews and automated refactoring of large codebases.

GPT-OSS solution:

- Analyze code structure and design patterns

- Identify potential security vulnerabilities and performance issues

- Recommend refactoring solutions and generate improved code

- Provide detailed change explanations and testing recommendations

6.2 Developer and Research Scenarios

6.2.1 AI Research and Experimentation

Research value:

- Reasoning mechanism research: Through Chain-of-Thought analysis, study large model reasoning patterns

- Alignment technology development: Conduct safety alignment technology research based on open weights

- Fine-tuning experiments: Explore fine-tuning strategies for specific domains

Experimental setup:

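```bash
# Research environment setup
git clone https://github.com/openai/gpt-oss
cd gpt-oss

# Load model for reasoning analysis
# (research/analyze_reasoning.py and its flags are illustrative; check the
# repository for the scripts it actually provides)
python research/analyze_reasoning.py \
  --model gpt-oss-20b \
  --dataset reasoning_benchmark \
  --output reasoning_analysis.json
```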

6.2.2 Educational and Training Applications

Teaching scenarios:

- Programming education: Students can observe AI’s programming thought processes

- Logic reasoning training: Learn structured thinking through Chain-of-Thought

- Multilingual learning: Utilize tool usage capabilities for language practice

Teaching advantages:

- No API key restrictions, suitable for large-scale educational deployment

- Transparent reasoning process, helpful for learning and understanding

- Local deployment ensures learning data privacy

6.2.3 Prototype Development and MVP Construction

Development workflow:

6.3 Emerging Application Patterns

6.3.1 Multi-Agent System Collaboration

GPT-OSS’s tool usage capabilities make it an excellent candidate for multi-agent systems:

```python
import asyncio

class ResearchAgent:
    # GPTOSS and the helper methods used below (search_papers, analyze_trends,
    # gather_statistics) are placeholders for your own model client and tools.
    def __init__(self):
        self.model = GPTOSS("gpt-oss-20b")
        self.tools = ["web_search", "paper_analysis", "data_visualization"]

    async def research_topic(self, topic):
        # Use Chain-of-Thought to formulate research strategy
        strategy = await self.model.plan_research(topic)

        # Execute multiple research tasks in parallel
        results = await asyncio.gather(
            self.search_papers(strategy.keywords),
            self.analyze_trends(strategy.time_range),
            self.gather_statistics(strategy.data_sources),
        )

        # Integrate research results
        report = await self.model.synthesize_report(results)
        return report
```
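The parallel step is the part most worth trying in isolation. Here is a runnable sketch of the same `asyncio.gather` pattern, with stub coroutines standing in for the real tools; the function names mirror the example above, and the sleeps simulate slow tool calls.

```python
import asyncio

async def search_papers(keywords):
    await asyncio.sleep(0.01)  # stands in for a slow tool call
    return f"papers about {', '.join(keywords)}"

async def analyze_trends(time_range):
    await asyncio.sleep(0.01)
    return f"trends for {time_range}"

async def gather_statistics(sources):
    await asyncio.sleep(0.01)
    return f"statistics from {len(sources)} sources"

async def research(keywords, time_range, sources):
    # gather() runs the three coroutines concurrently and returns their
    # results in the same order as the arguments.
    return await asyncio.gather(
        search_papers(keywords),
        analyze_trends(time_range),
        gather_statistics(sources),
    )

results = asyncio.run(research(["MoE", "quantization"], "2024-2025", ["arxiv", "blogs"]))
print(results)
```

Because the three calls overlap, total latency is close to that of the slowest tool rather than the sum of all three.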

6.3.2 Edge Computing and IoT Integration

gpt-oss-20b’s 16GB memory requirement enables deployment on edge devices:

Application scenarios:

- Smart factories: Real-time analysis of production data without cloud connectivity

- Smart cities: Local processing of traffic and environmental data

- Medical devices: Offline medical image analysis and diagnostic recommendations

Technical architecture:

```text
Edge Device (32GB RAM) → GPT-OSS-20B → Local Decision Making
        ↓
Periodic Batch Sync → Cloud Management System → Model Updates
```
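The “periodic batch sync” box can be sketched as a small buffer that collects local decisions and uploads them once enough have accumulated or enough time has passed. The class name, thresholds, and `upload` callback are all hypothetical; a real device would also need retry handling and persistent storage.

```python
import time

class BatchSyncBuffer:
    """Collect local decisions and hand them to `upload` in batches."""

    def __init__(self, upload, max_items=100, max_age_seconds=3600.0,
                 clock=time.monotonic):
        self._upload = upload
        self._max_items = max_items
        self._max_age = max_age_seconds
        self._clock = clock
        self._items = []
        self._last_flush = clock()

    def record(self, decision):
        """Store one decision and flush if the batch is full or stale."""
        self._items.append(decision)
        too_many = len(self._items) >= self._max_items
        too_old = self._clock() - self._last_flush >= self._max_age
        if too_many or too_old:
            self.flush()

    def flush(self):
        """Upload whatever is buffered and restart the age timer."""
        if self._items:
            self._upload(list(self._items))
            self._items.clear()
        self._last_flush = self._clock()

uploaded = []  # stands in for the cloud management system
buffer = BatchSyncBuffer(upload=uploaded.append, max_items=2)
for decision in ({"line": 1, "action": "ok"},
                 {"line": 2, "action": "halt"},
                 {"line": 3, "action": "ok"}):
    buffer.record(decision)
buffer.flush()  # push the remainder, e.g. at shutdown
print(uploaded)
```

Inference keeps working while the device is offline; only the sync step depends on connectivity.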

Bottom line

GPT-OSS is not merely a product release from OpenAI, but represents the evolution of maturity across the entire AI industry. When AI capabilities are no longer exclusive to a few companies, when reasoning processes become transparent and auditable, when deployment choices become diversified, we are witnessing the historical moment when AI technology transforms from “magic” to “tool.”

For technical leaders, the key question now is not “whether to use GPT-OSS,” but “how to most effectively integrate GPT-OSS into existing tech stacks and workflows.” Teams that combine closed and open-weight models flexibly, and keep tuning their own workflows around them, are more likely to see compounding returns from AI adoption.

References

Official Documentation

Technical Documentation and Guides

- OpenAI Harmony Response Format - OpenAI Cookbook

- How to run gpt-oss with vLLM - OpenAI Cookbook

- How to run gpt-oss with Transformers - OpenAI Cookbook

- How to run gpt-oss locally with Ollama - OpenAI Cookbook

Ecosystem Integration

- Welcome GPT OSS, the new open-source model family from OpenAI! - Hugging Face

- vLLM Now Supports gpt-oss - vLLM Blog

- Introducing OpenAI’s New Open Models on Databricks

- gpt-oss - Ollama Model Library

Technical Specifications and Benchmarks

Media Coverage and Analysis

- OpenAI launches two ‘open’ AI reasoning models - TechCrunch

- OpenAI releases a free GPT model that can run on your laptop - The Verge

- OpenAI Just Released Its First Open-Weight Models Since GPT-2 - WIRED

Security and Risk Assessment

- Safety Risks from Customizing Foundation Models via Fine-tuning - Stanford HAI

- AI Red Teaming Best Practices - HiddenLayer